Top AI Governance Tools to Master Ethical AI in 2025

Introduction

Artificial Intelligence is changing the world around us—reshaping industries, streamlining decisions, and unlocking capabilities we once thought impossible. But with that incredible power comes a serious question: How do we keep AI ethical, transparent, and accountable?

That’s where AI Governance Tools come into play. These tools aren’t just fancy dashboards or checklists; they’re the digital frameworks that help organizations build Responsible AI. Think of them as the guardrails that keep powerful algorithms from going off-track. Whether it’s ensuring fairness in hiring algorithms, maintaining transparency in financial AI models, or staying compliant with global data regulations, these tools are at the heart of deploying AI responsibly.

In an age where trust is everything, AI Governance Tools give organizations the ability to monitor, audit, and manage their AI systems responsibly. They help reduce bias, track model decisions, ensure AI compliance, and align with ever-evolving ethical standards.

In this guide, we’ll look at what AI governance tools are and how they work behind the scenes, explore the leading platforms, and explain why these tools are no longer optional: they’re essential for anyone deploying artificial intelligence in the real world.

What Is AI Governance?

Understanding AI Governance in 2025

In 2025, AI Governance isn’t just a buzzword—it’s a necessity. As artificial intelligence weaves deeper into everything from healthcare to banking to hiring, the demand for responsible oversight has skyrocketed.

But let’s clarify: what exactly is AI governance?

At its core, AI governance refers to the frameworks, policies, and tools that ensure AI systems are developed, deployed, and maintained responsibly. It’s not the same as MLOps (which focuses on managing machine learning operations) or AI ethics (the broader philosophical guidelines about right and wrong in AI). Instead, AI governance acts as the bridge—putting ethical principles into practice and making sure technical processes stay within legal, safe, and fair boundaries.

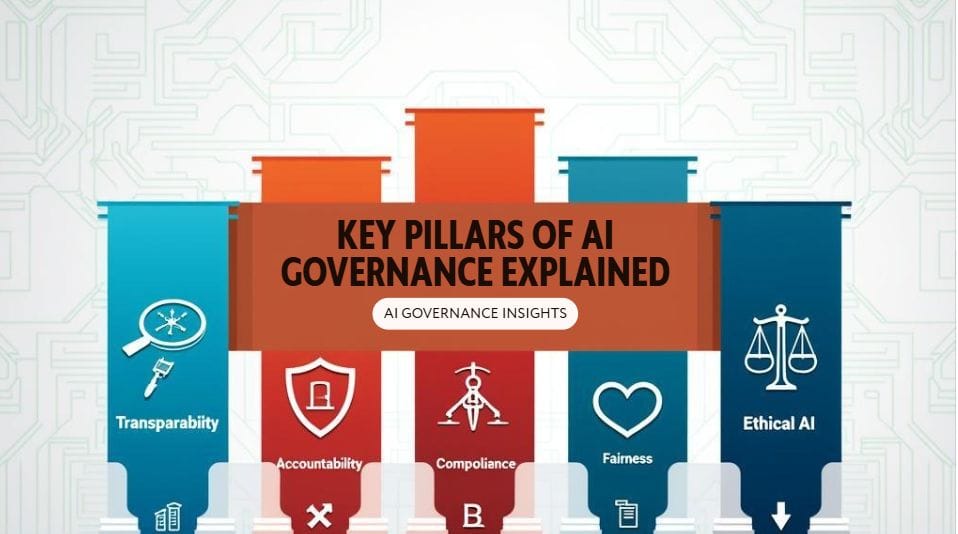

So, what holds AI governance together? There are four key pillars:

- Transparency – Can you explain how an AI system reached its decision?

- Accountability – Who is responsible when something goes wrong?

- Compliance – Does the AI meet local and global regulations (like GDPR, HIPAA, or the EU AI Act)?

- Fairness – Is the model treating all users equally, without hidden bias?

These principles matter more than ever, especially in regulated industries like finance, healthcare, and government. One misstep can lead to serious consequences.

Consider biased hiring algorithms. In one widely reported case, Amazon scrapped an experimental résumé-screening tool after discovering that, having learned from a decade of historical hiring data, it downgraded applications that mentioned the word “women’s.” Cases like this have brought public backlash, reputational damage, and growing legal scrutiny of automated hiring. That’s the cost of weak governance.

In today’s AI-driven landscape, governance isn’t optional—it’s your organization’s insurance policy for trust, safety, and sustainability.

Why Do AI Governance Tools Matter?

The Risks of Uncontrolled AI

As artificial intelligence becomes deeply embedded in business decisions, customer experiences, and even public policy, the risks of uncontrolled AI are growing fast. That’s why AI Governance Tools are no longer a luxury; they’re a lifeline.

Let’s start with the regulatory landscape. Governments around the world are no longer just watching; they’re acting. The EU AI Act, which entered into force in August 2024 and phases in its obligations through 2027, is one of the most comprehensive frameworks for regulating AI: it classifies systems by risk level and sets fines of up to €35 million or 7% of global annual turnover for the most serious violations. GDPR continues to demand transparency and data privacy, and in the U.S., states like California are introducing AI-specific legislation to protect consumers.

If your AI system is making decisions—like approving loans, screening job applications, or triaging patients—you must be able to explain how and why it made those choices. Without explainability and accountability, you’re exposing your organization to:

- Bias and discrimination: models trained on biased data can unfairly target certain groups.

- Regulatory fines: non-compliance could mean millions in penalties.

- Reputational damage: one scandal can erode public trust overnight.

Regulators aren’t the only ones watching. Investors want ESG (Environmental, Social, and Governance) alignment, and consumers demand fairness and transparency. The pressure is coming from every angle.

This is where AI Governance Tools make all the difference. They help organizations identify risks early, track compliance, audit model behavior, and build explainable AI systems that stand up to scrutiny. In short, they turn chaos into control.

AI Risk Types and Governance Responses

| AI Risk Type | Description | Governance Response |
|---|---|---|
| Bias and Discrimination | AI models produce unfair or prejudiced outcomes affecting certain groups. | Bias detection tools, fairness testing, diverse training data, and mitigation strategies. |
| Lack of Explainability | It is difficult to understand how AI decisions are made. | Explainable AI (XAI) dashboards, model interpretability techniques, and transparent documentation. |
| Regulatory Non-Compliance | Failure to meet legal requirements such as GDPR or the EU AI Act. | Automated compliance monitoring, audit trails, and regulatory reporting features. |
| Data Privacy Violations | Unauthorized use or exposure of sensitive data through AI systems. | Data encryption, access controls, data anonymization, and privacy impact assessments. |
| Model Drift and Degradation | AI models lose accuracy or behave unpredictably over time. | Continuous monitoring, drift detection alerts, and retraining workflows. |
| Security Vulnerabilities | Model tampering, adversarial attacks, or data breaches. | Role-based access controls, robust authentication, and security audits. |
| Operational Risks | Failures in AI deployment that cause downtime or business disruption. | Integration with MLOps, incident response plans, and real-time system monitoring. |
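The “continuous monitoring, drift detection alerts” response in the table can be illustrated with the population stability index (PSI), a drift score that is common in credit-risk modeling. Below is a minimal, library-free sketch that assumes equal-width bins over the baseline range and a small floor for empty bins. The sample data and the 0.25 alert threshold are illustrative only and are not taken from any particular governance product.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI of one numeric feature: `expected` is the training-time sample,
    `actual` the production sample; larger values mean more distribution shift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid dividing by zero for a constant baseline

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; out-of-range values
            # are clamped into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # feature values seen at training time
shifted = [0.5 + i / 200 for i in range(100)]  # production values crowded into the upper half

psi = population_stability_index(baseline, shifted)
# Common rule of thumb: < 0.1 stable, 0.1 to 0.25 worth watching, > 0.25 significant drift.
print(f"PSI = {psi:.2f}", "-> drift alert" if psi > 0.25 else "-> stable")
```

Monitoring tools typically compute a score like this for each feature on a schedule and alert when it crosses a threshold. You would tune the binning and the threshold to your own data.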

Whether you’re a startup or an enterprise, embracing governance isn’t about slowing down innovation. It’s about building AI that people can trust.

How Do AI Governance Tools Work?

Core Features & Functions

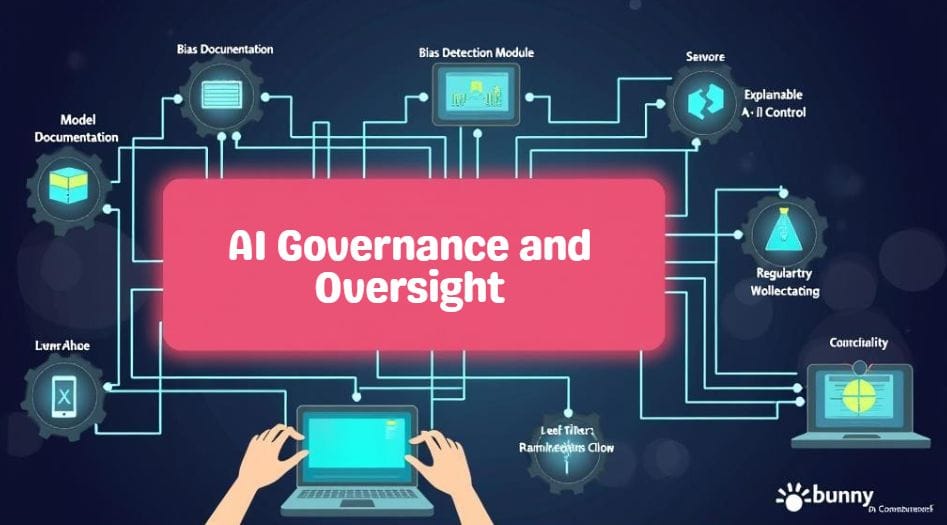

So, how exactly do AI Governance Tools help organizations manage their AI systems responsibly? The magic lies in the layers of functionality that come together to create visibility, accountability, and control over every part of the AI lifecycle.

Let’s break it down.

One of the most foundational features is model documentation and audit trails. These tools automatically log who built the model, how it was trained, what data was used, and when it was updated. That means every decision made by your AI has a digital paper trail—a must-have for transparency and compliance.
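To make the audit-trail idea concrete, here is a minimal sketch of a tamper-evident log. Each entry stores a SHA-256 hash of the previous one, so any later edit to a past entry breaks the chain. The `AuditTrail` class, the actors, and the model and dataset names are hypothetical. Real governance platforms use their own storage and schemas.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log; each entry stores the SHA-256 hash of the previous entry."""

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, details: dict) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": dict(details),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; editing or reordering past entries makes this False."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("alice", "train", {"model": "credit-risk-v3", "dataset": "loans_2024_q4"})
trail.record("bob", "approve", {"model": "credit-risk-v3"})
print(trail.verify())  # True

trail.entries[0]["details"]["dataset"] = "loans_2025_q1"  # simulate a silent edit
print(trail.verify())  # False
```

Hash chaining alone does not catch someone who deletes the newest entries or rewrites the entire chain. In practice, the latest hash would also be stored somewhere the model team cannot modify.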

Then comes bias detection and fairness testing. AI Governance Tools can scan datasets and model outputs to identify potential biases related to race, gender, age, and more. Think of it as a fairness health check that alerts you before your model causes real-world harm.
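A basic fairness health check compares how often a model gives each group a favorable outcome. The sketch below computes two common metrics by hand. The first is the demographic parity difference. The second is the disparate impact ratio, which U.S. employment-selection guidance flags when it falls below 0.8 (the “four-fifths rule”). The predictions and group labels are made up for illustration. Libraries such as Fairlearn provide tested implementations of metrics like these.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favorable predictions (1 = e.g. 'advance to interview') per group."""
    favorable, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between the most- and least-favored groups.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: below 0.8 fails the "four-fifths" rule of thumb.
        "impact_ratio": lowest / highest if highest else 1.0,
    }


# Hypothetical screening decisions for ten applicants in two groups.
preds = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

report = fairness_report(preds, groups)
print(report)
if report["impact_ratio"] < 0.8:
    print("Flag for review: impact ratio below 0.8")
```

In this example, group B is selected at a quarter of group A’s rate (0.2 versus 0.8). The ratio is far below 0.8, so the check would flag the model for review. A low ratio is a signal to investigate, not proof of discrimination on its own.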

Another key function is Explainable AI (XAI). These features turn complex models into understandable visual outputs, allowing both developers and non-technical stakeholders to see why a model made a decision. It’s not just useful—it’s essential for regulatory audits and user trust.
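For a linear scoring model, an explanation can be exact. Each feature’s contribution is its weight times how far the applicant’s value sits from a baseline, here assumed to be the average applicant. This is essentially what SHAP reports for a linear model when features are treated as independent and the baseline is the feature means. The loan model, weights, and feature names below are hypothetical. Complex models need approximation methods such as SHAP or LIME instead.

```python
def explain_linear_decision(weights, intercept, applicant, baseline):
    """Split a linear score into per-feature contributions relative to a baseline input."""
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in weights.items()
    }
    baseline_score = intercept + sum(w * baseline[name] for name, w in weights.items())
    # For a linear model the contributions add up exactly to the score difference.
    score = baseline_score + sum(contributions.values())
    return score, baseline_score, contributions


# Hypothetical loan-scoring model; baseline = assumed average applicant.
weights = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
intercept = -1.0
baseline = {"income_k": 50, "debt_ratio": 0.30, "late_payments": 1}
applicant = {"income_k": 42, "debt_ratio": 0.55, "late_payments": 3}

score, base, contribs = explain_linear_decision(weights, intercept, applicant, baseline)
print(f"baseline score {base:+.2f} -> applicant score {score:+.2f}")
# List the features that moved the score most, largest effect first.
for name, value in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name:<14} {value:+.2f}")
```

The contributions sum exactly to the gap between the applicant’s score and the baseline score. This lets a reviewer check the explanation against the model’s actual output.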

Security is also built in. Through role-based access control (RBAC), teams can manage who can view, edit, or deploy models, which helps prevent accidental (or malicious) misuse.
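Here is a minimal sketch of role-based access control. Permissions are attached to roles rather than to individual users, and every sensitive action is checked before it runs. The role names and the `authorize` helper are hypothetical. Governance platforms usually delegate this to an identity provider or to the cloud platform’s own access-management system.

```python
from enum import Enum, auto


class Action(Enum):
    VIEW = auto()
    EDIT = auto()
    DEPLOY = auto()


# Hypothetical role-to-permission policy; real tools load this from configuration.
ROLE_PERMISSIONS = {
    "auditor": {Action.VIEW},
    "data_scientist": {Action.VIEW, Action.EDIT},
    "release_manager": {Action.VIEW, Action.DEPLOY},
}


class AccessDenied(Exception):
    pass


def authorize(user_roles, action):
    """Return True if any of the user's roles grants `action`; otherwise raise."""
    for role in user_roles:
        if action in ROLE_PERMISSIONS.get(role, set()):
            return True
    raise AccessDenied(f"roles {sorted(user_roles)} may not {action.name.lower()} models")


authorize({"data_scientist"}, Action.EDIT)  # permitted
try:
    authorize({"data_scientist"}, Action.DEPLOY)  # not permitted: separation of duties
except AccessDenied as err:
    print("blocked:", err)
```

Keeping `EDIT` and `DEPLOY` in different roles is a simple form of separation of duties: the person who changes a model cannot push it to production alone.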

What about staying compliant? Many tools include regulatory reporting dashboards that map AI behaviors to GDPR, the EU AI Act, or internal governance policies—so you’re never caught off guard.

Finally, modern governance platforms are designed to integrate directly with ML pipelines and MLOps platforms. That means governance doesn’t slow down development—it becomes part of the process from day one.
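One common way to make governance part of the process is a gate step in the CI/CD pipeline that refuses to promote a model until policy checks pass. The sketch below uses hypothetical checks: an accuracy threshold, a minimum fairness ratio, a documentation flag, and named approvals. The `ModelCandidate` type and `governance_gate` function are illustrative. Real pipelines would pull these values from the governance tool and the team’s own policy.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCandidate:
    name: str
    accuracy: float
    impact_ratio: float  # e.g. disparate impact ratio from a fairness check
    has_model_card: bool
    approvals: list = field(default_factory=list)


# Hypothetical policy thresholds; each organization sets its own.
POLICY = {
    "min_accuracy": 0.85,
    "min_impact_ratio": 0.8,
    "required_approvals": {"risk", "legal"},
}


def governance_gate(model: ModelCandidate) -> list:
    """Collect every policy violation; an empty list means the model may be promoted."""
    violations = []
    if model.accuracy < POLICY["min_accuracy"]:
        violations.append(f"accuracy {model.accuracy:.2f} < {POLICY['min_accuracy']}")
    if model.impact_ratio < POLICY["min_impact_ratio"]:
        violations.append(f"impact ratio {model.impact_ratio:.2f} < {POLICY['min_impact_ratio']}")
    if not model.has_model_card:
        violations.append("missing model documentation")
    missing = POLICY["required_approvals"] - set(model.approvals)
    if missing:
        violations.append(f"missing approvals: {sorted(missing)}")
    return violations


candidate = ModelCandidate("resume-screener-v2", accuracy=0.91, impact_ratio=0.72,
                           has_model_card=True, approvals=["risk"])
problems = governance_gate(candidate)
# In a CI job, exiting non-zero when `problems` is non-empty would stop the deploy stage.
print("Deployment blocked:" if problems else "Deployment approved")
for p in problems:
    print(" -", p)
```

The gate returns a list of violations instead of failing on the first one, so a single pipeline run gives the team a complete to-do list.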

In short, AI Governance Tools act as both watchdog and guide, helping teams innovate boldly while staying firmly on the ethical path.

Top AI Governance Tools in 2025

As AI continues to scale across industries, companies need governance tools they can trust—solutions that go beyond the basics to offer deep visibility, fairness checks, and regulatory alignment. Fortunately, several leading platforms in 2025 have stepped up to meet this demand. Here are the top AI Governance Tools helping organizations build safer, more responsible AI today.

1. IBM watsonx.governance

IBM has been a long-standing leader in responsible tech, and its AI Governance suite reflects that experience. It offers end-to-end capabilities for:

- Model monitoring: Detects data drift and model decay in real time.

- Fairness tools: Test and mitigate bias before deployment.

- Audit history: Keeps detailed logs of model decisions, training data, and updates for full traceability.

IBM’s governance tools are especially popular in highly regulated sectors like finance and healthcare, where auditability is non-negotiable.

2. Microsoft Responsible AI Dashboard

Microsoft’s Responsible AI Dashboard is an all-in-one toolkit that integrates seamlessly with Azure Machine Learning. It includes:

- Explainability visualizations

- Performance across demographic groups

- Fairness metrics and disparity analysis

What sets Microsoft apart is its strong alignment with ethical AI principles and ease of use for both technical and non-technical teams.

3. Google Vertex AI Model Monitoring

Google’s Vertex AI offers robust Model Monitoring that’s tightly woven into its cloud ecosystem. Features include:

- Alerts when monitored features cross configurable skew or drift thresholds

- Training-serving skew and prediction drift detection

- Customizable fairness checks

Vertex AI excels in scalability and automation, making it ideal for enterprise-level AI deployments across multiple teams and regions.

4. Arthur.ai

Arthur.ai is a fast-growing startup focused on model observability. It’s gaining popularity for:

- Real-time bias and fairness reporting

- Outlier detection

- Actionable insights for model debugging

Arthur’s lightweight and intuitive design makes it a favorite among agile AI teams needing powerful insights without heavy overhead.

5. Fiddler AI

Fiddler AI is all about explainable AI and audit compliance. Its platform offers:

- XAI dashboards that turn black-box models into human-readable stories

- Bias and fairness tracking

- Custom audit templates for industry-specific needs

Fiddler is often used in industries like insurance and HR tech, where explaining AI decisions is essential to end-user trust.

Each of these tools has its strengths, but they all serve a common goal: empowering organizations to deploy AI that’s not just powerful—but also principled, transparent, and safe.

Best Practices for Implementing AI Governance

Practical Tips for Teams & Enterprises

Implementing AI Governance Tools isn’t just about buying software—it’s about building a culture of accountability around your AI systems. Whether you’re a startup experimenting with machine learning or a global enterprise deploying models at scale, following best practices can make or break your governance strategy.

Here’s how to get it right in 2025:

- Start with a recognized framework

Before anything else, choose a reliable governance framework. Standards and regulations like NIST’s AI Risk Management Framework, ISO/IEC 42001, or the EU AI Act offer clear roadmaps for responsible AI development. These act as your north star, helping you define policies, benchmarks, and risk tolerance from the beginning.

- Map models to governance workflows

Treat each AI model like a product with its own lifecycle. From data sourcing to model retirement, build a governance workflow that includes documentation, bias testing, monitoring, and approvals. This ensures every step is traceable and auditable.

- Assign clear roles and responsibilities

AI governance isn’t just a data science problem. Involve stakeholders across data science, compliance, legal, risk, and IT. Assign specific ownership of tasks like reviewing bias reports, managing access, or updating regulatory compliance logs.

- Monitor and audit continuously

Governance isn’t a one-time checklist; it’s ongoing. Use tools that provide real-time monitoring, alert systems, and audit trails to catch issues early and stay ahead of evolving risks. Models change, and so should your oversight.

- Collaborate across teams

Break down silos. Encourage cross-functional collaboration between ethics boards, data engineers, product teams, and legal departments. This ensures well-rounded governance that balances innovation with responsibility.
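The practice of treating each model like a product with its own lifecycle can be sketched as a small state machine. A model may only move between approved lifecycle stages, and each move records who made it. Skipping a step, such as deploying without a bias review, raises an error. The stage names, transitions, and owners below are hypothetical. Your chosen framework and internal policy would define the real ones.

```python
from enum import Enum


class Stage(Enum):
    DATA_SOURCING = "data_sourcing"
    TRAINING = "training"
    BIAS_REVIEW = "bias_review"
    APPROVED = "approved"
    DEPLOYED = "deployed"
    RETIRED = "retired"


# Hypothetical allowed transitions: no path skips bias review on the way to deployment.
TRANSITIONS = {
    Stage.DATA_SOURCING: {Stage.TRAINING},
    Stage.TRAINING: {Stage.BIAS_REVIEW},
    Stage.BIAS_REVIEW: {Stage.APPROVED, Stage.TRAINING},  # a failed review sends it back
    Stage.APPROVED: {Stage.DEPLOYED},
    Stage.DEPLOYED: {Stage.RETIRED, Stage.TRAINING},      # retrain on drift, or retire
    Stage.RETIRED: set(),
}


class GovernedModel:
    def __init__(self, name: str, owner: str):
        self.name = name
        self.owner = owner  # a named owner, per the "clear roles" practice
        self.stage = Stage.DATA_SOURCING
        self.history = [(Stage.DATA_SOURCING, owner)]

    def advance(self, new_stage: Stage, actor: str) -> None:
        """Move to `new_stage` only if the policy allows it, and record who did it."""
        if new_stage not in TRANSITIONS[self.stage]:
            raise ValueError(f"{self.name}: {self.stage.value} -> {new_stage.value} is not allowed")
        self.stage = new_stage
        self.history.append((new_stage, actor))


model = GovernedModel("churn-predictor", owner="data-science")
model.advance(Stage.TRAINING, "data-science")
model.advance(Stage.BIAS_REVIEW, "data-science")
model.advance(Stage.APPROVED, "risk-committee")
print([stage.value for stage, _ in model.history])
```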

With these best practices in place, you’re not just managing AI—you’re creating a trustworthy foundation that supports innovation while safeguarding users, data, and your company’s reputation.

Frequently Asked Questions

What is the difference between AI governance and AI ethics?

Answer:

AI governance is the structured process of managing, monitoring, and controlling the development and deployment of AI systems to ensure they are transparent, compliant, and safe. It involves tools, policies, and workflows. AI ethics, on the other hand, refers to the philosophical principles, like fairness, accountability, and human rights, that inform those policies. In simple terms, ethics defines what’s right, while governance ensures we do it right.

Are AI governance tools mandatory?

Answer:

No law yet mandates a specific AI governance tool, but regulations are catching up fast. The EU AI Act, for example, requires high-risk AI systems to have a risk management system, technical documentation, and automatic event logging. GDPR already imposes transparency obligations and limits on decisions based solely on automated processing, and in the U.S., voluntary frameworks such as NIST’s AI Risk Management Framework are shaping what regulators and customers expect. Governance tools are one of the most practical ways to produce the documentation and audit evidence these rules call for, so while they may not be mandatory for all businesses today, using them is quickly becoming the safest path forward.

Can small businesses use AI governance tools?

Answer:

Absolutely! You don’t need a Fortune 500 budget to practice responsible AI. Lightweight AI governance tools and open-source options such as Google’s What-If Tool, Fairlearn, and SHAP are well suited to small teams. These tools help startups detect bias, explain model behavior, and document decision processes without heavy enterprise overhead. Starting small doesn’t mean compromising on responsibility; it means scaling governance to fit your needs.

How does AI governance integrate with MLOps?

Answer:

MLOps focuses on automating and managing machine learning workflows—from data collection to model deployment. AI governance integrates into MLOps by adding a layer of oversight that ensures ethical and legal standards are met at every stage. This includes logging decisions, tracking bias, controlling access, and creating explainability dashboards. Together, AI governance and MLOps build a robust pipeline that delivers both performance and accountability in every model you ship.

Conclusion

AI governance tools have moved from being a nice-to-have to an absolute must-have. In today’s world, where regulations are tightening and people expect transparency, these tools are the key to deploying AI responsibly and at scale. They help businesses navigate the complex maze of compliance, reduce risks related to bias and errors, and build AI systems that earn users’ trust.

Whether you’re launching your very first AI project or managing hundreds of models across a global enterprise, investing in AI Governance Tools is a smart, forward-thinking choice. It’s not just about following rules; it’s about creating Responsible AI that drives real value while protecting your company’s reputation.

In the end, strong AI governance is your best defense and your greatest opportunity in the age of artificial intelligence. It turns uncertainty into confidence and risk into resilience—making AI work for everyone’s future.
